How to build an event-driven architecture with Fluvio

Mastering Event-Driven Design with Fluvio

Introduction

Let's take a tour of event-driven architecture with Fluvio. This platform offers a streamlined approach to building real-time, scalable, and resilient applications. By using Fluvio's capabilities, you can apply event-driven design to build solutions that meet the demands of today's dynamic environments.

In this guide, we'll delve into the intricacies of Fluvio, exploring its key features, benefits, and practical implementation strategies. You'll learn how to utilize the power of event-driven architecture to build applications that are responsive, scalable, and efficient.

Some information

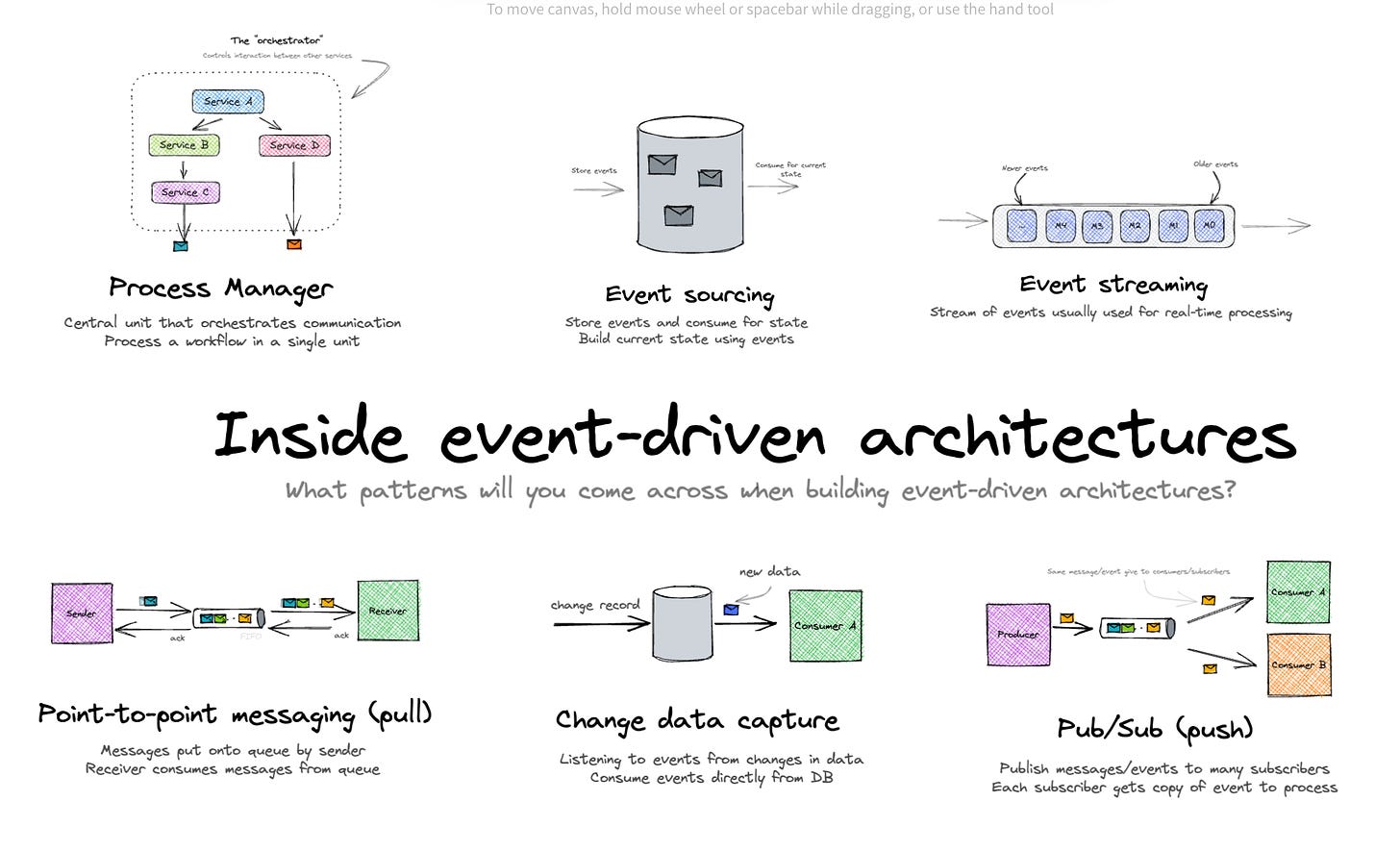

Event-driven architecture

Imagine you're hosting a party. You want to notify everyone when the pizza arrives. Instead of shouting to each guest individually, you could simply announce it once, and everyone who's interested in pizza will hear and react accordingly.

This is essentially the concept of event-driven architecture. It's a design pattern where components of a system communicate by producing and consuming events. Think of it as a way to create a more dynamic and responsive system, similar to how your party guests react to your announcement.

Now, let's introduce Pub/Sub.

Imagine you're the party host (the publisher). When the pizza arrives, you publish an event called "Pizza Is Here". Your guests (the subscribers) can subscribe to this event. When they hear your announcement (the event), they'll take action (e.g., grab a slice).

In a pub/sub system, the publisher sends out events, and subscribers can choose to listen to specific events. This decouples the components, making the system more scalable, flexible, and resilient.

Here's a more technical breakdown:

Publisher: Produces events and sends them to a message broker.

Message Broker: Stores and distributes events to interested subscribers.

Subscriber: Consumes events and takes appropriate actions.
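To make the three roles concrete, here is a minimal in-memory sketch. It is not how Fluvio works internally: `InMemoryBroker` and the topic name `pizza` are made up for illustration, and unlike a real broker this toy version does not store events, it only forwards them to whoever is subscribed at that moment.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class InMemoryBroker:
    """Toy message broker: keeps a list of subscriber callbacks per topic."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[str], None]) -> None:
        # A subscriber registers interest in a topic
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: str) -> int:
        # The publisher only knows the topic name, not who is listening
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        # Report how many subscribers received the event
        return len(handlers)


broker = InMemoryBroker()
received: List[str] = []

# Two guests subscribe to the pizza topic
broker.subscribe("pizza", lambda event: received.append(f"guest-1 heard: {event}"))
broker.subscribe("pizza", lambda event: received.append(f"guest-2 heard: {event}"))

# The host announces once; both guests react
delivered = broker.publish("pizza", "Pizza Is Here")
```

The publisher never calls a guest directly. It only names a topic, which is the decoupling described above.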

Imagine a social media platform. When a user posts a new message, that's an event. Other users who follow that user can subscribe to their posts and receive notifications whenever a new message is published.

Key benefits of Pub/Sub:

Scalability: Handles large volumes of events efficiently.

Flexibility: Allows for dynamic subscriptions and decoupled components.

Resilience: The broker can hold events, so a subscriber that fails can catch up once it recovers.

Real-time updates: Enables real-time communication and updates.

Note: I found an interesting video that can help you easily understand the concept; here is the link.

Fluvio

Fluvio's exceptional performance and efficiency make it a standout choice for real-time data processing. Its low-latency capabilities ensure that data is processed swiftly, enabling applications to respond to events in a timely manner. Furthermore, Fluvio's lightweight design and optimized architecture minimize resource consumption, making it suitable for even the most resource-constrained environments.

Fluvio's rich API support and customizable stream processing capabilities make it a developer's dream. With client libraries available for popular programming languages, you can easily integrate Fluvio into your existing applications. The platform's programmability allows you to tailor data processing pipelines to meet your specific requirements, ensuring maximum flexibility and control.

Moreover, Fluvio's WebAssembly integration enables you to securely execute custom stream processing logic, providing a powerful and efficient way to extend the platform's capabilities.

Code in Action

Note: I am using an Ubuntu Linux machine.

Setup Environment

Because Fluvio is built in Rust, I install Rust first so that the library works properly. Fluvio also offers several options for setting up an environment: local, Docker, Kubernetes, and cloud. For this experiment, I use Docker.

Note: Installation instructions are available at: https://www.fluvio.io/docs/fluvio/installation/

Fortunately, Fluvio already provides a Docker setup for us. We just need to copy the Dockerfile and the docker-compose.yaml into our directory.

To start the containers, simply type:

    docker compose up

After that, open another terminal and type this to create a topic:

    fluvio topic create quote-daily

Note: we can check our topics with the command fluvio topic list.

Define Pub/Sub and generate quote method

First, we define the object that we want to send. In this case, it is a quote.

    # ./schema.py
    from dataclasses import dataclass, field
    from datetime import datetime


    @dataclass
    class Quote:
        quote: str
        author: str
        # Record the creation time when the object is built
        created_at: datetime = field(default_factory=datetime.now)

        def display(self):
            return f"{self.quote} - {self.author}"
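The publisher later in this article sends the quote as a formatted string. As an alternative, here is a sketch that turns a Quote into JSON and back, so the consumer can rebuild the same object. The helpers `to_json` and `from_json` are hypothetical names and are not part of the original code. The Quote class is repeated here (without `display`) only so that the snippet runs on its own.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime


@dataclass
class Quote:
    quote: str
    author: str
    created_at: datetime = field(default_factory=datetime.now)


def to_json(quote: Quote) -> str:
    # datetime is not JSON serializable, so store it as an ISO 8601 string
    payload = asdict(quote)
    payload["created_at"] = quote.created_at.isoformat()
    return json.dumps(payload)


def from_json(raw: str) -> Quote:
    # Rebuild the dataclass, parsing the timestamp back into a datetime
    data = json.loads(raw)
    return Quote(
        quote=data["quote"],
        author=data["author"],
        created_at=datetime.fromisoformat(data["created_at"]),
    )


# Placeholder values, not a real quote from the API
original = Quote(quote="Stay curious.", author="Example Author")
restored = from_json(to_json(original))
```

A structured payload like this avoids having to split strings on the consumer side, at the cost of a slightly larger message.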

The generate_quote function fetches a random quote from a public API.

    # ./quote.py
    import requests

    from schema import Quote


    def generate_quote(url="https://zenquotes.io/api/random") -> Quote:
        # Fetch one random quote; fail early on network or HTTP errors
        response = requests.get(url, timeout=10)
        response.raise_for_status()
        json_data = response.json()
        # The first item holds the quote text under "q" and the author under "a"
        return Quote(quote=json_data[0]["q"], author=json_data[0]["a"])


    if __name__ == "__main__":
        print(generate_quote().display())
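Since the code above indexes the response as `json_data[0]["q"]` and `json_data[0]["a"]`, it can help to check that shape before using it. Here is a small sketch that works on an already decoded payload, so it can be tried without a network call. `parse_quote_payload` is a hypothetical helper, and the sample payload is invented for illustration.

```python
from typing import Any, Tuple


def parse_quote_payload(payload: Any) -> Tuple[str, str]:
    """Return (quote, author) from a decoded API response, or raise ValueError."""
    # The code above expects a list whose first item is an object
    if not isinstance(payload, list) or not payload:
        raise ValueError("expected a non-empty JSON list")
    first = payload[0]
    if not isinstance(first, dict) or "q" not in first or "a" not in first:
        raise ValueError("expected an object with 'q' and 'a' keys")
    # Trim stray whitespace around the text and the author name
    return str(first["q"]).strip(), str(first["a"]).strip()


# Invented sample in the same shape the code above reads
sample = [{"q": "Stay curious. ", "a": "Example Author"}]
text, author = parse_quote_payload(sample)
```

With a check like this, an unexpected response from the API raises a clear error instead of an IndexError or KeyError deep inside the publisher loop.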

We define the publisher to automatically generate a quote every 7 seconds:

    # ./fluvio_publisher.py
    import time

    from fluvio import Fluvio

    from quote import generate_quote

    TOPIC_NAME = "quote-daily"

    if __name__ == "__main__":
        # Connect to cluster
        fluvio = Fluvio.connect()
        producer = fluvio.topic_producer(TOPIC_NAME)
        try:
            while True:
                quote = generate_quote()
                quote_str = quote.display()
                print(f"PUBLISHER: {quote_str}")
                producer.send_string("{}: timestamp: {}".format(quote_str, quote.created_at))
                # Flush after each send; a flush placed after the endless loop would never run
                producer.flush()
                time.sleep(7)
        except KeyboardInterrupt:
            # Stop cleanly on Ctrl+C
            pass
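The loop above needs a running cluster, which makes it hard to try in isolation. Here is a sketch of the same send-then-flush idea with the producer passed in as a parameter, so a stand-in can replace it. `FakeProducer` and `publish_messages` are hypothetical names. The fake only mimics the two methods used above, `send_string` and `flush`, and says nothing about how the real Fluvio producer behaves internally.

```python
import time
from typing import Callable, List


class FakeProducer:
    """Stand-in that records calls instead of talking to a cluster."""

    def __init__(self) -> None:
        self.sent: List[str] = []
        self.flush_count = 0

    def send_string(self, value: str) -> None:
        self.sent.append(value)

    def flush(self) -> None:
        self.flush_count += 1


def publish_messages(producer, next_message: Callable[[], str], count: int, interval: float = 0.0) -> int:
    """Send `count` messages, then flush even if a send fails part-way."""
    sent = 0
    try:
        for _ in range(count):
            producer.send_string(next_message())
            sent += 1
            time.sleep(interval)
    finally:
        # The finally block guarantees the flush is reached
        producer.flush()
    return sent


fake = FakeProducer()
messages = iter(["first quote", "second quote"])
total = publish_messages(fake, lambda: next(messages), count=2)
```

Because the function only relies on `send_string` and `flush`, the object returned by `fluvio.topic_producer(TOPIC_NAME)` in the code above could be passed in the same way.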

Although the pattern is called pub/sub (publisher and subscriber), the names change slightly here: in Fluvio's API, the subscriber is called a consumer. In this case, the consumer continuously listens for records sent by the publisher.

    # ./fluvio_consumer.py
    from fluvio import Fluvio, Offset

    TOPIC_NAME = "quote-daily"
    PARTITION = 0

    if __name__ == "__main__":
        # Connect to cluster
        fluvio = Fluvio.connect()
        consumer = fluvio.partition_consumer(TOPIC_NAME, PARTITION)
        # Start at the end of the partition, so only newly published records arrive.
        # The stream keeps waiting for new records, so no outer loop is needed.
        for record in consumer.stream(Offset.from_end(0)):
            print(f"CONSUMER: {record.value_string()}")
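The consumer above only prints the raw string. If we want the pieces back, we can split the message using the format the publisher builds, `"{quote} - {author}: timestamp: {created_at}"`. Here is a sketch under that assumption. `parse_message` is a hypothetical helper, and the sample message is invented.

```python
from datetime import datetime
from typing import Tuple

SEPARATOR = ": timestamp: "


def parse_message(message: str) -> Tuple[str, str, datetime]:
    """Split a published message into (quote, author, created_at)."""
    # Split from the right, because the quote text itself may contain colons
    body, found, stamp = message.rpartition(SEPARATOR)
    if not found:
        raise ValueError("missing timestamp separator")
    # The last " - " separates the quote text from the author
    quote, found, author = body.rpartition(" - ")
    if not found:
        raise ValueError("missing author separator")
    # str(datetime) gives 'YYYY-MM-DD HH:MM:SS[.ffffff]', which fromisoformat accepts
    return quote, author, datetime.fromisoformat(stamp)


sample = "Stay curious. - Example Author: timestamp: 2024-01-02 03:04:05.678901"
quote, author, created_at = parse_message(sample)
```

Splitting on " - " is fragile when an author name contains that sequence, which is one reason a structured format such as JSON may be a better fit for real messages.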

Now is the moment of truth.

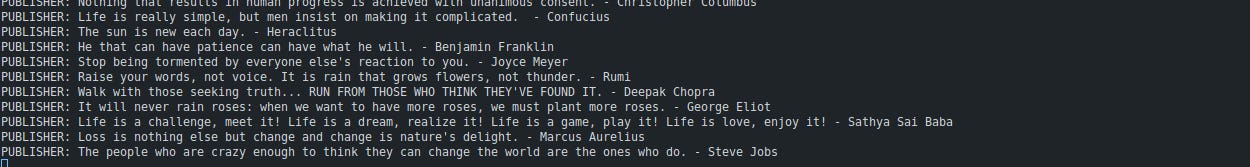

Run the publisher with the command python fluvio_publisher.py. We get:

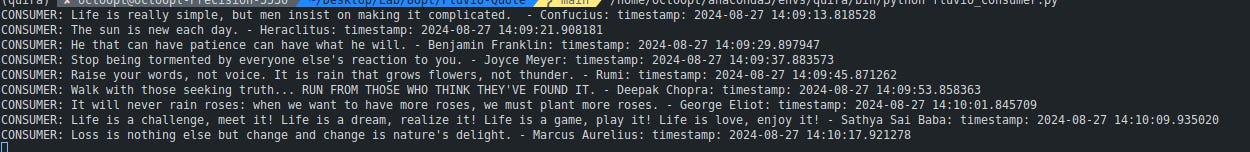

Do the same for the consumer with python fluvio_consumer.py. We get:

So far, the Fluvio cluster acts as the message broker, and the quote itself travels inside each message. This toy experiment can be extended by adding a database; we can use Redis. The flow can be described like this:

The publisher generates a quote and sends a message.

The consumer receives the message and requests the quote from the database.

Redis looks up the quote and returns it to the consumer.
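Here is a sketch of that flow, with plain Python objects standing in for both services so that it runs without any server. It assumes the publisher stores the quote under a key and sends only the key in the message. The source does not spell this detail out. The `store` dict plays the role of Redis, the `topic` list plays the role of the Fluvio topic, and all function names are hypothetical.

```python
import uuid
from typing import Dict, List

store: Dict[str, str] = {}  # stand-in for Redis (key -> quote text)
topic: List[str] = []       # stand-in for the Fluvio topic (ordered messages)


def publish_quote(text: str) -> str:
    """Save the quote, then send only its key as the message."""
    key = f"quote:{uuid.uuid4()}"
    store[key] = text
    topic.append(key)
    return key


def consume_quotes() -> List[str]:
    """Read pending messages and look each quote up by its key."""
    results = []
    while topic:
        key = topic.pop(0)
        # A missing key is reported instead of raising, so one bad message does not stop the loop
        results.append(store.get(key, "<missing quote>"))
    return results


first_key = publish_quote("Stay curious.")
publish_quote("Keep it simple.")
consumed = consume_quotes()
```

With real services, the dict operations would become calls to a Redis client and the list operations would become the producer and consumer shown earlier. Messages stay small because they carry only a key.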

Conclusion

In this article, we talked about one of the most useful patterns in software design: Pub/Sub, a fundamental component of event-driven architecture. It provides a robust and scalable foundation, enabling loosely coupled, asynchronous communication between components. In addition, we used Fluvio to demonstrate the pattern, with a publisher that sends a quote to the consumer every 7 seconds. Clearly, this framework gives us an easy way into event-driven architecture.

If you want me to continue this approach with LLM applications or develop it further, leave a comment to let me know!

Thank you for reading this article; I hope it added something to your knowledge bank! Just before you leave:

👉 Be sure to press the like button and follow me. It would be a great motivation for me.

👉 For more details on the code, refer to: GitHub